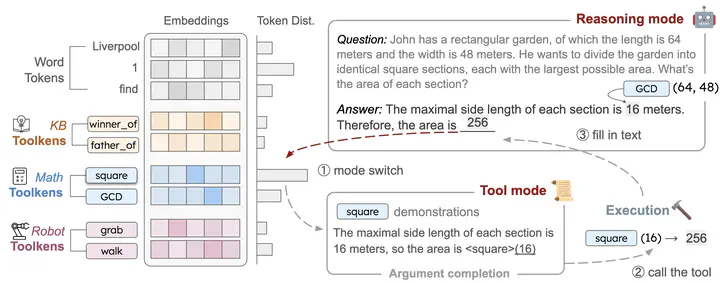

ToolkenGPT framework from original paper

This was a graduate project completed in the Fall '24 term at UC San Diego under Prof. Lian Hui Qin, Department of Computer Science and Engineering.

This project explored the capabilities of ToolkenGPT, a modular framework for tool-augmented language models, by integrating it with smaller, modern LLMs like Llama 3.2. My focus was on enabling multi-task learning within this architecture, i.e., training the model to handle diverse tasks like numerical reasoning and knowledge-based QA simultaneously.
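For readers new to the idea, here is a minimal, dependency-free sketch of the toolken mechanism described in the ToolkenGPT paper: each tool gets its own learned output embedding, and these are scored alongside the frozen model's ordinary word embeddings at every decoding step. The function name `next_token`, the tiny 2-dimensional matrices, and the tool names are all hypothetical and chosen only for illustration. They are not taken from this project's `model.py`.

```python
from typing import List, Tuple, Union

# Hypothetical toy parameters: 3 "word" rows and 2 "toolken" rows, hidden size 2.
WORD_HEAD: List[List[float]] = [[0.2, 0.1], [0.5, 0.3], [-0.1, 0.9]]  # frozen LM head
TOOLKEN_HEAD: List[List[float]] = [[0.9, 0.0], [0.1, 0.8]]            # trainable rows
TOOL_NAMES: List[str] = ["add", "multiply"]                           # one name per toolken row


def dot(a: List[float], b: List[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def next_token(
    hidden: List[float],
    word_head: List[List[float]],
    toolken_head: List[List[float]],
    tool_names: List[str],
) -> Tuple[str, Union[int, str]]:
    """Score words and toolkens together and return the greedy choice.

    Returns ("word", token_id) for an ordinary token, or ("tool", name)
    when a toolken wins, which is the signal to switch into tool-call mode.
    """
    word_logits = [dot(hidden, row) for row in word_head]
    tool_logits = [dot(hidden, row) for row in toolken_head]
    logits = word_logits + tool_logits  # concatenated vocabulary: words first, then toolkens

    best = max(range(len(logits)), key=logits.__getitem__)
    if best < len(word_head):
        return ("word", best)
    return ("tool", tool_names[best - len(word_head)])


if __name__ == "__main__":
    print(next_token([1.0, 0.0], WORD_HEAD, TOOLKEN_HEAD, TOOL_NAMES))
    print(next_token([0.0, 1.0], WORD_HEAD, TOOLKEN_HEAD, TOOL_NAMES))
```

Because only the toolken rows are trained, new tools can be added without touching the base model's weights, which is what makes the framework modular.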

Project Highlights

- Framework Re-implementation: Adapted ToolkenGPT to work with Hugging Face’s Transformer-based Llama models. This required significant changes to the training, model, and inference pipelines to ensure compatibility and extensibility.

- Multi-task Learning Extension: Designed and implemented a new multitask training pipeline and model (`MultiTaskFunctionLM`) to support concurrent learning across multiple tasks.

- Experimental Research: Conducted experiments to evaluate task synergy and performance trade-offs in multi-task setups. This included analyzing the effect of joint-task training strategies.

- Evaluation & Benchmarking: Developed custom evaluation scripts for benchmark datasets such as GSM8K, FuncQA, and KAMEL, measuring model generalization and reasoning performance.
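As an illustration of the evaluation step, the sketch below scores free-text generations against GSM8K-style references, whose solutions end with a `#### <answer>` line. It is a simplified stand-in, not the project's `eval_gsm8k_funcqa.py`: the helper names, the "take the last number in the generation" heuristic, and the relative tolerance are all assumptions made for this example.

```python
import math
import re
from typing import List, Optional

# Matches integers and decimals, allowing thousands separators (e.g. "1,250" or "-3.5").
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def reference_answer(solution: str) -> float:
    """Parse the final answer from a GSM8K-style solution ending in '#### <answer>'."""
    return float(solution.split("####")[-1].strip().replace(",", ""))


def predicted_answer(generation: str) -> Optional[float]:
    """Heuristic (assumption): treat the last number in the generation as the answer."""
    matches = NUMBER.findall(generation)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def accuracy(generations: List[str], solutions: List[str], rel_tol: float = 1e-4) -> float:
    """Fraction of generations whose extracted number matches the reference."""
    pairs = list(zip(generations, solutions))
    correct = 0
    for generation, solution in pairs:
        pred = predicted_answer(generation)
        if pred is not None and math.isclose(
            pred, reference_answer(solution), rel_tol=rel_tol, abs_tol=1e-9
        ):
            correct += 1
    return correct / len(pairs) if pairs else 0.0


if __name__ == "__main__":
    gens = [
        "She sells 9 eggs at $2 each, so she makes 18 dollars.",
        "The total is 1,250.",
        "I am not sure.",
    ]
    refs = ["9 * 2 = 18\n#### 18", "500 + 750 = 1250\n#### 1,250", "3 + 4 = 7\n#### 7"]
    print(accuracy(gens, refs))
```

A tolerance-based comparison is used here rather than exact string matching so that answers such as `18` and `18.0` count as equal. A stricter or looser threshold may suit other benchmarks.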

Key Contributions:

- Rewrote core training and inference modules to support Hugging Face models (`train_llama.py`, `inference_llama.py`, `model.py`)

- Introduced a multitask model and training script (`multitask_model.py`, `train_llama_multitask.py`)

- Built dataset converters and evaluators for task-specific benchmarks (`convert_data.py`, `eval_gsm8k_funcqa.py`, etc.)

Note: The multitask model is still under development and currently supports inference on only the primary task in a multi-task setting due to architectural limitations.

Takeaway:

This project provided hands-on experience in deep model engineering, multi-task learning, and LLM fine-tuning, while demonstrating how tool-augmented models can scale to support flexible reasoning across diverse tasks.

Attributions:

- Image source: ToolkenGPT by Hao et al.